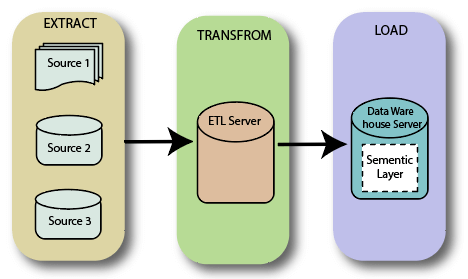

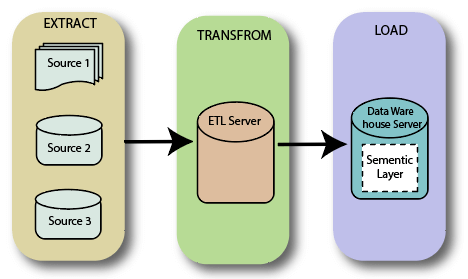

The documents are then validated against the business requirements to ensure that they align with business needs. ETL stands for Extract, Transform and Load. It is the primary approach that data extraction tools and BI tools use to extract data from a data source, transform that data into a common format suited for further analysis, and load it into a common storage location, normally a data warehouse. In a data migration project, data is extracted from a legacy application and loaded into a new application.

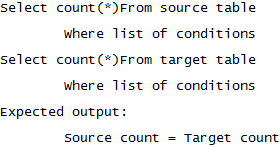

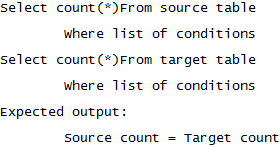

ETL testing is a data-centric testing process that validates that the data has been transformed and loaded into the target as expected. Changes to metadata: track changes to table metadata in the source and target environments. Example: Write a source query that matches the data in the target table after transformation. There are several challenges in ETL testing.

Testers often need to regression test an existing ETL mapping that contains a number of transformations. A Type 2 SCD (slowly changing dimension) is designed to create a new record whenever there is a change to a set of columns. Development environments often do not have enough source data for performance testing of the ETL process. In addition, the system creates metadata that is used to diagnose source system problems and improve data quality. Automating ETL testing is the key to regression testing of the ETL, particularly in an agile development environment. It is important to understand the business requirements so that the tester is aware of what is being tested. Any differences need to be validated to confirm that they are expected as per the changes. Example: Compare country codes between the development, test and production environments. Example source query for a dimension that keeps the latest customer address: `SELECT cust_id, address1, address2, city, state, country FROM (SELECT cust_id, address1, address2, city, state, country, ROW_NUMBER() OVER (PARTITION BY cust_id ORDER BY created_date DESC NULLS LAST) AS addr_rank FROM Customer) WHERE addr_rank = 1`. Target query: `SELECT cust_id, address1, address2, city, state, country FROM Customer_dim`. ETL Validator comes with a Metadata Compare Wizard for automatically capturing and comparing table metadata. Execute the modified ETL that needs to be regression tested. ETL testing is needed when a data warehouse is set up for the first time, after the data gets loaded. Is a new record created every time there is a change to the SCD key columns, as expected? Compare column data types between the source and target environments.
Example 1: A lookup might perform well when the data volume is small but become a bottleneck that slows down the ETL task when the volume is large. Some bugs are related to the GUI of the application, such as colors, alignment, spelling mistakes and navigation. A change log should be maintained in every mapping document. In this type of testing, SQL queries are run to validate business transformations and to check whether data is loaded into the target destination with the correct transformations. Cleansing of data: After the data is extracted, it moves into the next phase, the cleaning and conforming of data.
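The latest-address comparison described above can be sketched with Python's built-in `sqlite3` module. This is only an illustration under stated assumptions: the sample rows are invented, the column list is shortened, and the ranking is written with `ORDER BY created_date DESC` (SQLite sorts NULLs last in descending order, and window functions need SQLite 3.25 or later).

```python
import sqlite3

# Hypothetical sample data; table names follow the queries in the text,
# the column list is shortened for readability.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customer (cust_id INTEGER, address1 TEXT, city TEXT, created_date TEXT);
CREATE TABLE Customer_dim (cust_id INTEGER, address1 TEXT, city TEXT);
INSERT INTO Customer VALUES
  (1, '1 Old Rd', 'Austin', '2020-01-01'),
  (1, '2 New Rd', 'Dallas', '2021-06-01'),
  (2, '9 Elm St', 'Boston', NULL);
INSERT INTO Customer_dim VALUES
  (1, '2 New Rd', 'Dallas'),
  (2, '9 Elm St', 'Boston');
""")

# Source query: keep only the most recent address per customer.
SOURCE_QUERY = """
SELECT cust_id, address1, city FROM (
  SELECT cust_id, address1, city,
         ROW_NUMBER() OVER (PARTITION BY cust_id
                            ORDER BY created_date DESC) AS addr_rank
  FROM Customer
) WHERE addr_rank = 1
"""
TARGET_QUERY = "SELECT cust_id, address1, city FROM Customer_dim"


def rows(query):
    """Run a query and return its rows as a set for order-independent comparison."""
    return set(conn.execute(query).fetchall())


# Rows the ETL should have loaded but did not, and rows that should not be there.
missing_in_target = rows(SOURCE_QUERY) - rows(TARGET_QUERY)
unexpected_in_target = rows(TARGET_QUERY) - rows(SOURCE_QUERY)
print("missing:", missing_in_target, "unexpected:", unexpected_in_target)
```

Comparing sets in both directions catches rows missing from the target as well as rows the target should not contain.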

A date column, for example, is defined with the DATE data type and can assume any valid date. Data quality testing includes number checks, date checks, precision checks, data checks and null checks. ETL testing is a concept that can be applied to different tools and databases in the information management industry. This article covers the process of ETL testing, its types, and some of its challenges. Review the requirement for calculating the interest. Build aggregates: creating an aggregate means summarizing and storing data. Other steps include identifying data sources and requirements, and implementing business logic and dimensional modelling. Vishal Agrawal on Data Integration, ETL, ETL Testing. Using the component test case, the data in the OBIEE report can be compared with the data from the source and target databases, thus identifying issues in the ETL process as well as in the OBIEE report. ETL testing is conducted to identify and mitigate issues in data collection, transformation and storage. Nowadays, new applications or new versions of them are introduced into the market daily.
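The null, number, precision and date checks mentioned above might look like the following sketch. The field names (`cust_id`, `amount`, `order_date`), the two-decimal precision rule and the `YYYY-MM-DD` date format are assumptions made for illustration, not rules taken from the article.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation


def quality_issues(row):
    """Return a list of data quality problems found in one record (a dict of strings)."""
    issues = []

    # Null check: the key column must be present and non-empty.
    if not row.get("cust_id"):
        issues.append("cust_id is null")

    # Number check and precision check (assumed rule: at most 2 decimal places).
    try:
        amount = Decimal(row.get("amount") or "")
        if not amount.is_finite():
            issues.append("amount is not a number")
        elif -amount.as_tuple().exponent > 2:
            issues.append("amount has more than 2 decimal places")
    except InvalidOperation:
        issues.append("amount is not a number")

    # Date check: the value must parse as a real calendar date.
    try:
        datetime.strptime(row.get("order_date") or "", "%Y-%m-%d")
    except ValueError:
        issues.append("order_date is not a valid date")

    return issues


good = {"cust_id": "C1", "amount": "10.50", "order_date": "2022-02-22"}
bad = {"cust_id": "", "amount": "10.505", "order_date": "2022-02-30"}
print(quality_issues(good))
print(quality_issues(bad))
```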

Verify that the table and column data type definitions are as per the data model design specifications.

Hevo Data Inc. 2022. Analysts must ensure that they have captured all the relevant screenshots, listed the steps to reproduce the test cases, and recorded the actual versus expected results for each test case. It verifies that the counts in the source and target match. February 22nd, 2022. This testing is done to check the data integrity of old and new data when new data is added. After all the defects are logged in a defect management system (usually JIRA), they are assigned to particular stakeholders for fixing. Verify that the lengths of database columns are as per the data model design specifications. Baseline the reference data and compare it with the latest reference data so that the changes can be validated. Verify that proper constraints and indexes are defined on the database tables as per the design specifications. The next step involves executing the created test cases in the QA (Quality Assurance) environment to identify the types of bugs or defects encountered during testing. Example: Write a source query that matches the data in the target table after transformation. Source query: `SELECT fst_name||','||lst_name FROM Customer WHERE updated_dt > sysdate-7`. Target query: `SELECT full_name FROM Customer_dim WHERE updated_dt > sysdate-7`. Compare the results of the transformed test data in the target table with the expected values. Track changes to table metadata over a period of time. However, there are reasonable constraints or rules that can be applied to detect situations where the data is clearly wrong. Validate reference data between a spreadsheet and a database, or across environments. ETL stands for Extract, Transform and Load and is the process of integrating data from multiple sources, transforming it into a common format, and delivering it to a destination, usually a data warehouse, for gathering valuable business insights. Compare your output with the data in the target table.
Incremental ETL loads only the data that changed in the source system, using some kind of change capture mechanism to identify changes. The tester is tasked with regression testing the ETL. Implement the logic using your favourite programming language. Metadata testing is conducted to check the data type, data length and index. Estimate the expected data volumes in each of the source tables for the ETL for the next 1-3 years. Source data is denormalized in the ETL so that report performance can be improved. Tracking metadata changes helps ensure that the QA and development teams are aware of changes to table metadata in both the source and target systems. Review the run time of each individual ETL task (workflow) and the order of execution of the ETL. It ensures that all data is loaded into the target table. Black-box testing is a method of software testing that examines the functionality of an application without peering into its internal structures or workings. Apply transformations to the data using SQL or a procedural language such as PL/SQL to reflect the ETL transformation logic. Set up test data for performance testing either by generating sample data or by making a copy of the (scrubbed) production data. One of the tools often used for performance testing and tuning is Informatica.
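As a rough illustration of the source-versus-target transformation check above, the sketch below re-applies the name concatenation in SQL and uses `EXCEPT` to list source rows whose transformed value is not in the target. It runs on SQLite through Python's `sqlite3` module; the sample rows and the `cust_id` column are invented, and the Oracle-style `sysdate-7` filter is left out because SQLite does not support it.

```python
import sqlite3

# Hypothetical sample data: customer 2 was loaded with a space instead of a comma.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customer (cust_id INTEGER, fst_name TEXT, lst_name TEXT);
CREATE TABLE Customer_dim (cust_id INTEGER, full_name TEXT);
INSERT INTO Customer VALUES (1, 'Ada', 'Lovelace'), (2, 'Alan', 'Turing');
INSERT INTO Customer_dim VALUES (1, 'Ada,Lovelace'), (2, 'Alan Turing');
""")

# Expected values computed from the source, minus what is actually in the target.
MISMATCH_QUERY = """
SELECT cust_id, fst_name || ',' || lst_name AS full_name FROM Customer
EXCEPT
SELECT cust_id, full_name FROM Customer_dim
ORDER BY cust_id
"""

mismatches = conn.execute(MISMATCH_QUERY).fetchall()
for cust_id, expected in mismatches:
    print(f"cust_id {cust_id}: expected full_name {expected!r} not found in target")
```

Running the same query with the two `SELECT` statements swapped would list target rows that have no matching source row.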

Compare the data (values) between the flat file and the target, effectively validating 100% of the data. Verify that there are no redundant tables and that the database is optimally normalized. Some of the common data profile comparisons that can be done between the source and target are: Example 1: Compare the count of non-null values between the source and target for each column, based on the mapping. However, performing 100% data validation is a challenge when large volumes of data are involved. It verifies whether data is moved as expected. Automate data transformation testing using ETL Validator: ETL Validator comes with a Component Test Case, which can be used to test transformations using the white-box approach or the black-box approach. For transformation testing, this involves reviewing the transformation logic from the mapping design document and setting up the test data appropriately. The data type and length of a particular attribute may vary across files or tables even though the semantic definition is the same. These tests are essential when testing large amounts of data. Validate the data and the application functionality that uses the data.
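The per-column non-null count comparison from Example 1 can be sketched as follows. The tables, sample rows and the `COLUMN_MAPPING` dictionary are hypothetical; in practice the mapping would come from the mapping design document.

```python
import sqlite3

# Hypothetical source and target tables; the target lost one email value.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (cust_id INTEGER, country TEXT, email TEXT);
CREATE TABLE customer_dim (cust_id INTEGER, country_cd TEXT, email TEXT);
INSERT INTO customer VALUES
  (1, 'US', 'a@example.com'), (2, 'IE', NULL), (3, NULL, 'c@example.com');
INSERT INTO customer_dim VALUES
  (1, 'US', 'a@example.com'), (2, 'IE', NULL), (3, NULL, NULL);
""")

# Source column -> target column (assumed mapping for this sketch).
COLUMN_MAPPING = {"cust_id": "cust_id", "country": "country_cd", "email": "email"}


def non_null_count(table, column):
    """COUNT(column) counts only non-null values. Names come from the trusted mapping."""
    return conn.execute(f"SELECT COUNT({column}) FROM {table}").fetchone()[0]


# Collect the columns whose non-null counts differ: {source column: (source, target)}.
profile_diffs = {}
for src_col, tgt_col in COLUMN_MAPPING.items():
    src_count = non_null_count("customer", src_col)
    tgt_count = non_null_count("customer_dim", tgt_col)
    if src_count != tgt_count:
        profile_diffs[src_col] = (src_count, tgt_count)

print(profile_diffs)
```

A profile comparison like this is cheaper than a full row-by-row comparison, which is why it is useful when 100% validation is impractical.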

It checks whether the data follows the rules and standards defined in the data model. Verify whether data is missing in columns where it is required. An executive report shows the number of cases by case type in OBIEE. Responsibilities and practices in ETL testing include: identifying problems and providing solutions for potential issues; approving requirements and design specifications; writing SQL queries for various scenarios such as count tests; ensuring that projected data is loaded into the data warehouse without any data loss or truncation; ensuring that the ETL application appropriately rejects invalid data, replaces it with default values and reports it; ensuring that data is loaded into the data warehouse within the prescribed and expected time frames to confirm scalability and performance; giving all methods appropriate unit tests regardless of visibility; and using appropriate coverage techniques to measure the effectiveness of all unit tests. Late-arriving dimensions are another scenario where a foreign key relationship mismatch might occur, because the fact record gets loaded ahead of the dimension record. Performance testing checks whether data is loaded into the systems within the expected time frames and how the system behaves when multiple users log on to it at the same time. Conforming means resolving conflicts between incompatible data so that it can be used in an enterprise data warehouse. Example: A new country code has been added and an existing country code has been marked as deleted in the development environment without the approval of, or notification to, the data steward. Example: In a data warehouse scenario, fact tables have foreign keys to the dimension tables.
Example: A new column added to the SALES fact table was not migrated from the development to the test environment, resulting in ETL failures. If an ETL process does a full refresh of the dimension tables while the fact table is not refreshed, the surrogate foreign keys in the fact table are no longer valid. The ETL process consists of three main steps: extract, transform and load. The advantage of this approach is that the transformation logic does not need to be reimplemented during testing. Approve design specifications and requirements. The huge volume of historical data may cause memory issues in the system. In this technique, the data type, index, length, constraints and so on are verified. Typically, the records updated by an ETL process are stamped with a run ID or the date of the ETL run. The correct values are accepted and the rest are rejected. Look for duplicate rows with the same unique key column, or the same unique combination of columns, as per the business requirement. In this ETL testing technique, you need to make sure that the ETL application works correctly after migrating to a new platform or box. A load-related bug is one where the system does not support multiple users or the expected load. Many database fields can contain a range of values that cannot be enumerated.
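The foreign key and duplicate checks described above can be sketched with three small queries. The star schema below (`sales_fact`, `customer_dim`, `cust_key`) and its rows are invented for illustration: one fact row points at a dimension key that does not exist, one has a null key, and one business key appears twice in the dimension.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer_dim (cust_key INTEGER, cust_id TEXT);
CREATE TABLE sales_fact (sale_id INTEGER, cust_key INTEGER, amount REAL);
INSERT INTO customer_dim VALUES (10, 'C1'), (11, 'C2'), (12, 'C2');
INSERT INTO sales_fact VALUES (1, 10, 5.0), (2, 11, 7.5), (3, 99, 1.0), (4, NULL, 2.0);
""")

# Fact rows whose foreign key has no matching dimension row (orphans),
# for example after a full dimension refresh or a late-arriving dimension.
orphans = conn.execute("""
SELECT f.sale_id
FROM sales_fact f
LEFT JOIN customer_dim d ON f.cust_key = d.cust_key
WHERE f.cust_key IS NOT NULL AND d.cust_key IS NULL
ORDER BY f.sale_id
""").fetchall()

# Count of fact rows with a null foreign key.
null_fk_count = conn.execute(
    "SELECT COUNT(*) FROM sales_fact WHERE cust_key IS NULL"
).fetchone()[0]

# Business keys that occur more than once in the dimension.
duplicates = conn.execute("""
SELECT cust_id, COUNT(*) AS n
FROM customer_dim
GROUP BY cust_id
HAVING COUNT(*) > 1
""").fetchall()

print("orphans:", orphans, "null FKs:", null_fk_count, "duplicates:", duplicates)
```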

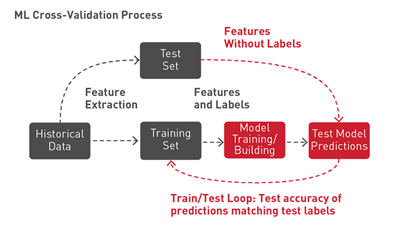

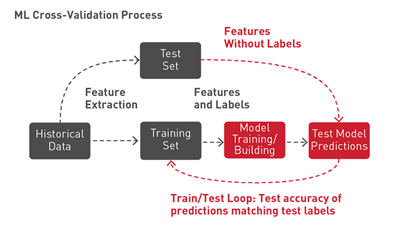

The goal of ETL regression testing is to verify that the ETL produces the same output for a given input before and after a change. Different parts of an organization may each handle customer information independently, and the way they store that data can be quite different. These approaches to ETL testing are time-consuming, error-prone and seldom provide complete test coverage. ETL stands for Extract-Transform-Load and is the process by which data is loaded from the source system into the data warehouse. Frequent changes in customer requirements cause test cases to be reworked and re-executed. Business intelligence is the process of collecting raw or business data and turning it into information that is more useful and meaningful. Although ETL testing is a very important process, companies can face some challenges when trying to deploy it in their applications. Example: In the data warehouse scenario, ETL changes are pushed on a periodic basis. Unnecessary columns should be deleted before loading into the staging area. Analysts must try to reproduce each defect and log it with proper comments and screenshots.
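One way to picture the regression goal stated above is to diff a baseline copy of the target rows against the rows produced after the ETL change. The helper below is a minimal sketch in plain Python; the function name `diff_snapshots`, the key column and the sample rows are all hypothetical.

```python
def diff_snapshots(baseline, current, key):
    """Compare two lists of row dicts by primary key and report what differs."""
    base = {row[key]: row for row in baseline}
    curr = {row[key]: row for row in current}
    return {
        # Keys only present after the change.
        "added": sorted(curr.keys() - base.keys()),
        # Keys that disappeared after the change.
        "removed": sorted(base.keys() - curr.keys()),
        # Keys present in both runs whose column values differ.
        "changed": sorted(k for k in base.keys() & curr.keys() if base[k] != curr[k]),
    }


# Output of the ETL before the change (baseline) and after it (current).
baseline = [
    {"cust_id": 1, "country": "US"},
    {"cust_id": 2, "country": "IE"},
    {"cust_id": 3, "country": "FR"},
]
current = [
    {"cust_id": 1, "country": "US"},
    {"cust_id": 2, "country": "GB"},
    {"cust_id": 4, "country": "DE"},
]

report = diff_snapshots(baseline, current, key="cust_id")
print(report)
```

Each reported difference then has to be reviewed to decide whether it is an expected effect of the change or a regression.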

Syntax tests: these report dirty data based on invalid characters, character patterns, incorrect upper- or lower-case order and so on. ETL testing is required in order to do any of this. Hevo Data, a no-code data pipeline, helps to load data from any data source, such as databases, SaaS applications, cloud storage, SDKs and streaming services, and simplifies the ETL process. Come up with the transformed data values, or the expected values, for the test data from the previous step. Data validation testing is used to verify data authenticity and completeness with the help of validation counts and periodic spot checks between the target and real-time data. ETL Validator also comes with a Metadata Compare Wizard that can be used to track changes to table metadata over a period of time. This testing is done to check the navigation or GUI aspects of the front-end reports. ETL testing involves comparing large volumes of data, typically millions of records.

This date can be used to identify the newly updated or inserted records in the target system. To verify that all the expected data is loaded in target from the source, data completeness testing is done.

Example: The data model column data type is NUMBER but the database column data type is STRING (or VARCHAR). Beyond the general testing process, there are mainly 12 types of ETL testing. One is a table reconciliation or product balancing technique, which validates the data in the target systems (e.g. databases, flat files). Check for any rejected records. Verify that the foreign key to primary key relationships are preserved during the ETL. Data warehouse testing is a testing method in which the data inside a data warehouse is tested for integrity, reliability, accuracy and consistency in order to comply with the company's data framework. Data started getting truncated in the production data warehouse for the comments column after this change was deployed in the source system. Some of the tests that can be run are to compare and validate counts, aggregates and actual data between the source and target for columns with simple or no transformation. An ETL process automatically extracts the data from sources by using configurations and connectors, and then transforms the data by applying calculations such as filtering, aggregation, ranking and business transformations. Metadata checks include: the source and target data types should be the same; the lengths of data types in the source and target should be equal; data field types and formats should be specified; and the source data type length should not be less than the target data type length. View or process the data in the target system. Organizations may have legacy data sources such as RDBMS, DW (data warehouse), etc. This type of ETL testing reviews the data in the summary report, verifies whether the layout and functionality are as expected, and makes calculations for further analysis. Example: In a financial company, the interest earned on a savings account depends on the daily balance in the account for the month.
The advantage of this approach is that the test can easily be rerun on a larger set of source data. The benchmarking capability allows the user to automatically compare the latest data in the target table with a previous copy to identify the differences. Denormalization of data is quite common in a data warehouse environment. ETL Validator comes with a Component Test Case that supports comparing an OBIEE report (logical query) with the database queries from the source and target. Metadata testing includes data type checks, data length checks and index/constraint checks. Is the latest record tagged as the latest record by a flag? Compare the transformed data in the target table with the expected values for the test data. Compare the record counts of the primary source table and the target table. The report helps the stakeholders understand the bugs and the result of the testing process in order to maintain the proper delivery threshold. It is similar to comparing the checksums of your source and target data. ETL testing comes into play when the whole ETL process needs to be validated and verified in order to prevent data loss and data redundancy. Because testing is a broad concept, there are no predefined rules for performing it. Source-to-target testing is also called validation testing. Example 2: One of the indexes in the data warehouse was dropped accidentally, which resulted in performance issues in reports. Many database fields can contain only a limited set of enumerated values. Ascertain data requirements and sources. Example: The data model specification for the first_name column is of length 100, but the corresponding database table column is only 80 characters long. What can make it worse is that the ETL task may run by itself for hours, causing the entire ETL process to run much longer than the expected SLA.
Example: The Customer dimension in the data warehouse is denormalized to hold the latest customer address data. ETL testing involves verifying data at the various stages between source and destination. ETL can transform dissimilar data sets into a unified structure, after which BI tools can be used to derive meaningful insights and reports from the data. One of the challenges in maintaining reference data is verifying that all the reference data values from the development environment have been migrated properly to the test and production environments. Compare table and column metadata across environments to ensure that changes have been migrated appropriately.
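The metadata checks above, such as the first_name column specified with length 100 but created with length 80, can be sketched by comparing a data model specification with the declared column types of the table. This example uses SQLite's `PRAGMA table_info`, which reports the type exactly as declared; the `DATA_MODEL` dictionary and the table definition are assumptions for illustration, and other databases expose the same information through their own catalog views.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical target table: first_name was created shorter than specified.
conn.execute(
    "CREATE TABLE customer_dim ("
    "cust_id NUMBER, first_name VARCHAR(80), comments VARCHAR(200))"
)

# Expected column types from the (assumed) data model design specification.
DATA_MODEL = {
    "cust_id": "NUMBER",
    "first_name": "VARCHAR(100)",
    "comments": "VARCHAR(200)",
}


def declared_types(table):
    """Map column name -> declared type. Rows are (cid, name, type, notnull, dflt_value, pk)."""
    return {row[1]: row[2].upper() for row in conn.execute(f"PRAGMA table_info({table})")}


actual = declared_types("customer_dim")

# {column: (specified type, actual type)} for every column that deviates from the spec.
metadata_diffs = {
    col: (spec, actual.get(col))
    for col, spec in DATA_MODEL.items()
    if actual.get(col) != spec
}
print(metadata_diffs)
```

The same comparison can be run between two environments by building both dictionaries from database metadata instead of from a specification.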

If they then want to check the history of a customer and find out which products he or she bought in response to different marketing campaigns, it would be very tedious. Data migration testing is conducted to identify inconsistencies in the data migration early. Source query: `SELECT country, count(*) FROM customer GROUP BY country`. Target query: `SELECT country_cd, count(*) FROM customer_dim GROUP BY country_cd`. Design, create and execute test plans, test cases and test harnesses. ETL testing differs from application testing because it requires a data-centric testing approach. ETL testing is the process of verifying the accuracy of data that has been loaded from source to destination after business transformation. Data transformation testing is done because in many cases it cannot be achieved by writing one source query. Count the records with null foreign key values in the child table. Data is extracted from an OLTP database, transformed to match the data warehouse schema, and loaded into the data warehouse database. Ensure that all expected data is loaded into the target table. While most of the data completeness and data transformation tests are relevant for incremental ETL testing, a few additional tests are also relevant. Data quality testing is done to avoid errors due to date or order number during business processes. This includes checking for invalid characters, patterns, precisions, nulls and numbers from the source, and reporting the invalid data. Testing is important before and after a system upgrade to confirm that the system is compatible and works properly with the new upgrades. Set up test data for various scenarios of daily account balance in the source system. Data profiling is used to identify data quality issues, and the ETL is designed to fix or handle these issues.
Revisit ETL task dependencies and reorder the ETL tasks so that they run in parallel as much as possible. ETL testing also involves verifying data at the various intermediate stages between source and destination. These differences can then be compared with the source data changes for validation. The ETL testing process resembles any customary testing process. For example: Customer ID. An ETL process is generally designed to run in either full mode or incremental mode.
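The country count queries above can be run side by side and compared programmatically. The sketch below does so on SQLite with invented sample rows in which the target is missing one customer, so the grouped counts disagree for one country.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (cust_id INTEGER, country TEXT);
CREATE TABLE customer_dim (cust_id INTEGER, country_cd TEXT);
INSERT INTO customer VALUES (1, 'US'), (2, 'US'), (3, 'IE');
INSERT INTO customer_dim VALUES (1, 'US'), (2, 'US');
""")

# Each query returns (country, count) pairs, which dict() turns into a lookup table.
source_counts = dict(conn.execute(
    "SELECT country, COUNT(*) FROM customer GROUP BY country"))
target_counts = dict(conn.execute(
    "SELECT country_cd, COUNT(*) FROM customer_dim GROUP BY country_cd"))

# {country: (source count, target count)} wherever the two sides disagree.
count_diffs = {
    country: (source_counts.get(country, 0), target_counts.get(country, 0))
    for country in sorted(source_counts.keys() | target_counts.keys())
    if source_counts.get(country, 0) != target_counts.get(country, 0)
}
print(count_diffs)
```

Matching counts do not prove that the values are correct, so a check like this is usually a first pass before comparing the actual data.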

To support your business decisions, the data in your production systems has to be in the correct order. The raw data consists of the records of an organization's daily transactions, such as interactions with customers, administration of finance, and management of employees. Validate the source and target table structures against the mapping document. This is because duplicate data might lead to incorrect analytical reports. The general methodology of ETL testing is to use SQL scripting or to eyeball the data.

Sitemap 26

ETL testing is a data centric testing process to validate that the data has been transformed and loaded into the target as expected. Changes to MetadataTrack changes to table metadata in the Source and Target environments. Example: Write a source query that matches the data in the target table after transformation. There are several challenges in ETL testing: Test Triangle offer following testing services: Test Triangle is an emerging IT service provider specializing in

Often testers need to regression test an existing ETL mapping with a number of transformations. Manjiri Gaikwad on Automation, Data Integration, Data Migration, Database Management Systems, Marketing Automation, Marketo, PostgreSQL. Type 2 SCD is designed to create a new record whenever there is a change to a set of columns. Share your experience of understanding ETL Testing in the comments section below! Often development environments do not have enough source data for performance testing of the ETL process. In addition to these, this system creates meta-data that is used to diagnose source system problems and improves data quality. Automating the ETL testing is the key for regression testing of the ETL particularly more so in an agile development environment. Its important to understand business requirements so that the tester can be aware of what is being tested. Any differences need to be validated whether are expected as per the changes. Example: Compare Country Codes between development, test and production environments. Source QuerySELECT cust_id, address1, address2, city, state, country FROM Customer SELECT cust_id, address1, address2, city, state, country,ROW_NUMBER( ) OVER (PARTITION BY cust_id ORDER BY created_date NULLS LAST) addr_rankFROM CustomerWHERE ROW_NUMBER( ) OVER (PARTITION BY cust_id ORDER BY created_date NULLS LAST) = 1, Target QuerySELECT cust_id, address1, address2, city, state, country FROM Customer_dim. ETL Validator comes withMetadata Compare Wizardfor automatically capturing and comparing Table Metadata. Execute the modified ETL that needs to be regression tested. When setting up a data warehouse for the first time, after the data gets loaded. Is a new record created every time there is a change to the SCD key columns as expected? Compare column data types between source and target environments. 
Example 1: A lookup might perform well when the data is small but might become a bottle neck that slowed down the ETL task when there is large volume of data. The bugs that are related to the GUI of the application such as colors, alignment, spelling mistakes, navigation, etc. Change log should maintain in every mapping doc. In this type of Testing, SQL queries are run to validate business transformations and it also checks whether data is loaded into the target destination with the correct transformations. Cleansing of data :After the data is extracted, it will move into the next phase, of cleaning and conforming of data.

ETL testing is a data centric testing process to validate that the data has been transformed and loaded into the target as expected. Changes to MetadataTrack changes to table metadata in the Source and Target environments. Example: Write a source query that matches the data in the target table after transformation. There are several challenges in ETL testing: Test Triangle offer following testing services: Test Triangle is an emerging IT service provider specializing in

Often testers need to regression test an existing ETL mapping with a number of transformations. Manjiri Gaikwad on Automation, Data Integration, Data Migration, Database Management Systems, Marketing Automation, Marketo, PostgreSQL. Type 2 SCD is designed to create a new record whenever there is a change to a set of columns. Share your experience of understanding ETL Testing in the comments section below! Often development environments do not have enough source data for performance testing of the ETL process. In addition to these, this system creates meta-data that is used to diagnose source system problems and improves data quality. Automating the ETL testing is the key for regression testing of the ETL particularly more so in an agile development environment. Its important to understand business requirements so that the tester can be aware of what is being tested. Any differences need to be validated whether are expected as per the changes. Example: Compare Country Codes between development, test and production environments. Source QuerySELECT cust_id, address1, address2, city, state, country FROM Customer SELECT cust_id, address1, address2, city, state, country,ROW_NUMBER( ) OVER (PARTITION BY cust_id ORDER BY created_date NULLS LAST) addr_rankFROM CustomerWHERE ROW_NUMBER( ) OVER (PARTITION BY cust_id ORDER BY created_date NULLS LAST) = 1, Target QuerySELECT cust_id, address1, address2, city, state, country FROM Customer_dim. ETL Validator comes withMetadata Compare Wizardfor automatically capturing and comparing Table Metadata. Execute the modified ETL that needs to be regression tested. When setting up a data warehouse for the first time, after the data gets loaded. Is a new record created every time there is a change to the SCD key columns as expected? Compare column data types between source and target environments. 
Example 1: A lookup might perform well when the data is small but might become a bottle neck that slowed down the ETL task when there is large volume of data. The bugs that are related to the GUI of the application such as colors, alignment, spelling mistakes, navigation, etc. Change log should maintain in every mapping doc. In this type of Testing, SQL queries are run to validate business transformations and it also checks whether data is loaded into the target destination with the correct transformations. Cleansing of data :After the data is extracted, it will move into the next phase, of cleaning and conforming of data.  This is defined as the DATE datatype and can assume any valid date. Data quality testing includes number check, date check, precision check, data check , null check etc. ETL testing is a concept which can be applied to different tools and databases in information management industry. Read along to find out about this interesting process. It will talk about the process of ETL Testing, its types, and also some challenges. Review the requirement for calculating the interest. Build aggregates Creating an aggregate is summarizing and storing data which is available in, Identifying data sources and requirements, Implement business logics and dimensional Modelling. Vishal Agrawal on Data Integration, ETL, ETL Testing Using the component test case the data in the OBIEE report can be compared with the data from the source and target databases thus identifying issues in the ETL process as well as the OBIEE report. The ETL testing is conducted to identify and mitigate the issues in data collection, transformation and storage. Nowadays, daily new applications or their new versions are getting introduced into the market.

This is defined as the DATE datatype and can assume any valid date. Data quality testing includes number check, date check, precision check, data check , null check etc. ETL testing is a concept which can be applied to different tools and databases in information management industry. Read along to find out about this interesting process. It will talk about the process of ETL Testing, its types, and also some challenges. Review the requirement for calculating the interest. Build aggregates Creating an aggregate is summarizing and storing data which is available in, Identifying data sources and requirements, Implement business logics and dimensional Modelling. Vishal Agrawal on Data Integration, ETL, ETL Testing Using the component test case the data in the OBIEE report can be compared with the data from the source and target databases thus identifying issues in the ETL process as well as the OBIEE report. The ETL testing is conducted to identify and mitigate the issues in data collection, transformation and storage. Nowadays, daily new applications or their new versions are getting introduced into the market.  Verify that the table and column data type definitions are as per the data model design specifications.

Verify that the table and column data type definitions are as per the data model design specifications.  Hevo Data Inc. 2022. Analysts must ensure that they have captured all the relevant screenshots, mentioned steps to reproduce the test cases and the actual vs expected results for each test case. It Verifies for the counts in the source and target are matching. February 22nd, 2022 This testing is done to check the data integrity of old and new data with the addition of new data. After logging all the defects onto Defect Management Systems (usually JIRA), they are assigned to particular stakeholders for defect fixing. Verify that the length of database columns are as per the data model design specifications. Baseline reference data and compare it with the latest reference data so that the changes can be validated. Verify that proper constraints and indexes are defined on the database tables as per the design specifications. The next step involves executing the created test cases on the QA (Question-Answer) environment to identify the types of bugs or defects encountered during Testing. Example: Write a source query that matches the data in the target table after transformation.Source Query, SELECT fst_name||,||lst_name FROM Customer where updated_dt>sysdate-7Target QuerySELECT full_name FROM Customer_dim where updated_dt>sysdate-7. Compare the results of the transformed test data in the target table with the expected values. Track changes to Table metadata over a period of time. However, there are reasonable constraints or rules that can be applied to detect situations where the data is clearly wrong. Validate Reference data between spreadsheet and database or across environments. ETL stands for Extract, Transform and Load and is the process of integrating data from multiple sources, transforming it into a common format, and delivering the data into a destination usually a Data Warehouse for gathering valuable business insights. 
Compare your output with the data in the target table. Incremental ETL only loads the data that changed in the source system, using some kind of change capture mechanism to identify changes. The tester is tasked with regression testing the ETL. Implement the logic using your favourite programming language. Metadata testing is conducted to check the data type, data length, and index. Estimate the expected data volumes in each of the source tables for the ETL for the next 1-3 years. Source data is denormalized in the ETL so that report performance can be improved. Tracking metadata changes helps ensure that the QA and development teams are aware of the changes to table metadata in both the source and target systems. Review the run times of each individual ETL task (workflow) and the order of execution of the ETL. Data completeness testing ensures that all data is loaded into the target table. Black-box testing is a method of software testing that examines the functionality of an application without peering into its internal structures or workings. Apply transformations on the data using SQL or a procedural language such as PL/SQL to reflect the ETL transformation logic. Set up test data for performance testing, either by generating sample data or by making a copy of the (scrubbed) production data. Informatica is one of the tools commonly used for performance testing and tuning.
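The source-query versus target-query comparison described above can be sketched in a few lines. This is a minimal illustration rather than a definitive implementation: it assumes an in-memory SQLite database standing in for the real source and target systems, the sample rows are invented, the `cust_id` key column and the `compare_queries` helper are hypothetical, and the `updated_dt > sysdate-7` filter from the example is left out to keep the sketch short.

```python
import sqlite3

# In-memory SQLite stands in for the real source and target (assumption).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Customer (cust_id INTEGER, fst_name TEXT, lst_name TEXT);
CREATE TABLE Customer_dim (cust_id INTEGER, full_name TEXT);
INSERT INTO Customer VALUES (1, 'Ada', 'Lovelace'), (2, 'Alan', 'Turing');
-- Row 2 was loaded with a space instead of a comma (a deliberate defect).
INSERT INTO Customer_dim VALUES (1, 'Ada,Lovelace'), (2, 'Alan Turing');
""")

# The source query re-applies the transformation; the target query reads the result.
source_sql = "SELECT cust_id, fst_name || ',' || lst_name FROM Customer"
target_sql = "SELECT cust_id, full_name FROM Customer_dim"


def compare_queries(conn, source_sql, target_sql):
    """Return rows only the source query produced and rows only the target holds."""
    source_rows = set(conn.execute(source_sql).fetchall())
    target_rows = set(conn.execute(target_sql).fetchall())
    return sorted(source_rows - target_rows), sorted(target_rows - source_rows)


missing_in_target, unexpected_in_target = compare_queries(conn, source_sql, target_sql)
print("expected but not loaded:", missing_in_target)
print("loaded but not expected:", unexpected_in_target)
```

Comparing sets ignores duplicate rows, so a separate duplicate check is still needed when row multiplicity matters.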

Compare data (values) between the flat file and the target data, effectively validating 100% of the data. Verify that there are no redundant tables and that the database is optimally normalized. Some common data profile comparisons can be done between the source and target. Example 1: Compare the count of non-null values between source and target for each column, based on the mapping.
However, performing 100% data validation is a challenge when large volumes of data are involved. Data completeness testing verifies whether data is moved as expected. Data transformation testing can be automated using ETL Validator, which comes with a Component Test Case that can be used to test transformations using the white-box approach or the black-box approach. For transformation testing, this involves reviewing the transformation logic from the mapping design document and setting up the test data appropriately. The data type and length for a particular attribute may vary in files or tables even though the semantic definition is the same. These tests are essential when testing large amounts of data. Validate the data and the application functionality that uses the data.
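The column-count profile comparison mentioned above (counts of non-null values per column, matched through the mapping) could look roughly like this. It is a sketch under stated assumptions: SQLite in memory replaces the real systems, the tables and rows are made up, and `non_null_counts` and `mapping` are hypothetical names.

```python
import sqlite3


def non_null_counts(conn, table, columns):
    """COUNT(col) skips NULLs, so this returns non-null counts per column."""
    select_list = ", ".join(f"COUNT({col})" for col in columns)
    row = conn.execute(f"SELECT {select_list} FROM {table}").fetchone()
    return dict(zip(columns, row))


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (cust_id INTEGER, city TEXT, country TEXT);
CREATE TABLE customer_dim (cust_id INTEGER, city TEXT, country_cd TEXT);
INSERT INTO customer VALUES (1, 'Dublin', 'IE'), (2, NULL, 'US'), (3, 'Pune', 'IN');
-- country_cd was lost for customer 3 during the load (a deliberate defect).
INSERT INTO customer_dim VALUES (1, 'Dublin', 'IE'), (2, NULL, 'US'), (3, 'Pune', NULL);
""")

# Source column -> target column, as it would appear in the mapping document.
mapping = {"cust_id": "cust_id", "city": "city", "country": "country_cd"}

src = non_null_counts(conn, "customer", list(mapping))
tgt = non_null_counts(conn, "customer_dim", list(mapping.values()))

# Keep only the columns whose non-null counts differ between source and target.
mismatches = {s: (src[s], tgt[t]) for s, t in mapping.items() if src[s] != tgt[t]}
print(mismatches)
```

A profile check like this is cheap on large tables, but it only shows that a column lost or gained values, not which rows are affected.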

The goal of ETL regression testing is to verify that the ETL produces the same output for a given input before and after the change. Each source system handles the customer information independently, and the way each of them stores that data is quite different. Manual approaches to ETL testing are time-consuming, error-prone and seldom provide complete test coverage. ETL stands for Extract-Transform-Load, and it is the process by which data is loaded from the source system into the data warehouse. Frequent changes in customer requirements cause re-iteration of test cases and execution.
Business Intelligence is the process of collecting raw business data and turning it into information that is more useful and meaningful. Although ETL testing is a very important process, companies can face some challenges when trying to deploy it in their applications. Here are the steps: Example: In the data warehouse scenario, ETL changes are pushed on a periodic basis (eg. Unnecessary columns should be deleted before loading into the staging area. Analysts must try to reproduce each defect and log it with proper comments and screenshots.
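The regression goal stated above (same output for the same input before and after an ETL change) can be checked by keeping a baseline copy of the target table and diffing it against the table produced by the modified ETL. The sketch below assumes SQLite in memory, invented rows, and hypothetical names (`customer_dim_baseline`, `table_diff`).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Baseline: target table as produced by the ETL before the change.
CREATE TABLE customer_dim_baseline (cust_id INTEGER, full_name TEXT);
-- Latest: target table as produced by the modified ETL on the same input.
CREATE TABLE customer_dim (cust_id INTEGER, full_name TEXT);
INSERT INTO customer_dim_baseline VALUES (1, 'Ada,Lovelace'), (2, 'Alan,Turing');
INSERT INTO customer_dim VALUES (1, 'Ada,Lovelace'), (2, 'Alan, Turing');
""")


def table_diff(conn, baseline, latest):
    """Rows present in only one of the two tables, found with EXCEPT in both directions."""
    only_in_baseline = conn.execute(
        f"SELECT * FROM {baseline} EXCEPT SELECT * FROM {latest}"
    ).fetchall()
    only_in_latest = conn.execute(
        f"SELECT * FROM {latest} EXCEPT SELECT * FROM {baseline}"
    ).fetchall()
    return only_in_baseline, only_in_latest


only_in_baseline, only_in_latest = table_diff(conn, "customer_dim_baseline", "customer_dim")
print("changed or removed:", only_in_baseline)
print("changed or added:", only_in_latest)
```

Any rows reported here then need to be reviewed to decide whether the difference is an expected result of the change.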

Syntax tests report dirty data based on invalid characters, character patterns, incorrect upper or lower case order, and so on. In order to do any of this, the process of ETL testing is required. Come up with the transformed data values or the expected values for the test data from the previous step.
Data validation testing is used to verify data authenticity and completeness with the help of validation counts and periodic spot checks between the target and real-time data. ETL Validator also comes with a Metadata Compare Wizard that can be used to track changes to table metadata over a period of time. GUI or navigation testing checks the navigation or GUI aspects of the front-end reports. ETL testing involves comparing large volumes of data, typically millions of records.
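A syntax test of the kind described above can be approximated with a regular expression per column. The pattern below is purely illustrative (an assumed rule that names contain only letters, spaces, apostrophes and hyphens), and `dirty_values` is a hypothetical helper, not part of any tool named in this article.

```python
import re

# Assumed rule for a name column: starts with a letter, then letters,
# spaces, apostrophes or hyphens only.
NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z '\-]*")


def dirty_values(values, pattern):
    """Return the values that are NULL (None) or do not fully match the pattern."""
    return [v for v in values if v is None or not pattern.fullmatch(v)]


sample = ["Ada", "Al@n", "O'Brien", "", None]
rejected = dirty_values(sample, NAME_PATTERN)
print(rejected)
```

In practice the rule for each column would come from the data model or the business requirements rather than from the tester's guess.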

This date can be used to identify the newly updated or inserted records in the target system. Data completeness testing is done to verify that all the expected data is loaded into the target from the source.


Example: The data model column data type is NUMBER but the database column data type is STRING (or VARCHAR). Beyond the general testing process, there are mainly 12 types of ETL testing. One of them is a table reconciliation or product balancing technique, which usually validates the data in the target systems (databases, flat files). Check for any rejected records. Verify that the foreign key to primary key relations are preserved during the ETL. Data Warehouse Testing is a testing method in which the data inside a data warehouse is tested for integrity, reliability, accuracy and consistency in order to comply with the company's data framework. Data started getting truncated in the production data warehouse for the comments column after this change was deployed in the source system. Some of the tests that can be run are to compare and validate counts, aggregates and actual data between the source and target for columns with simple transformation or no transformation. An ETL process automatically extracts the data from sources by using configurations and connectors, and then transforms the data by applying calculations such as filtering, aggregation, ranking, and business transformations. Metadata checks include: the source data type and target data type should be the same; the lengths of data types in source and target should be equal; data field types and formats should be specified; and the source data type length should not be less than the target data type length. View or process the data in the target system. Organizations may have legacy data sources such as an RDBMS or a DW (Data Warehouse). Report testing reviews data in the summary report, verifies whether the layout and functionality are as expected, and makes calculations for further analysis. Example: In a financial company, the interest earned on a savings account depends on the daily balance in the account for the month.
The advantage of this approach is that the test can easily be rerun on a larger source data set. Benchmarking capability allows the user to automatically compare the latest data in the target table with a previous copy to identify the differences. Denormalization of data is quite common in a data warehouse environment. ETL Validator comes with a Component Test Case that supports comparing an OBIEE report (logical query) with the database queries from the source and target. Metadata testing includes data type checks, data length checks and index/constraint checks. Is the latest record flagged as the latest record? Compare the transformed data in the target table with the expected values for the test data. Compare the count of records in the primary source table and the target table. The report will help the stakeholders understand the bug and the result of the testing process in order to maintain the proper delivery threshold. It is similar to comparing the checksum of your source and target data. ETL testing comes into play when the whole ETL process needs to be validated and verified in order to prevent data loss and data redundancy. As testing is a broad concept, there are no predefined rules for performing it. Source to Target Testing (Validation Testing). Example 2: One of the indexes in the data warehouse was dropped accidentally, which resulted in performance issues in reports. Many database fields can only contain a limited set of enumerated values. Ascertaining data requirements and sources. Example: The data model specification for the first_name column is of length 100, but the corresponding database table column is only 80 characters long. What can make it worse is that the ETL task may be running by itself for hours, causing the entire ETL process to run much longer than the expected SLA.
Example: The Customer dimension in the data warehouse is denormalized to have the latest customer address data. ETL testing involves the verification of data at the various stages used between source and destination. ETL can transform dissimilar data sets into a unified structure. BI tools are then used to derive meaningful insights and reports from this data. One of the challenges in maintaining reference data is to verify that all the reference data values from the development environments have been migrated properly to the test and production environments. Compare table and column metadata across environments to ensure that changes have been migrated appropriately.
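The metadata checks discussed above (data type and length against the data model specification) can be sketched as a comparison between an expected specification and what the database reports. This example is an assumption-heavy illustration: it uses SQLite's `PRAGMA table_info`, whereas a real warehouse would expose this through its own data dictionary or information schema, and `expected_spec` and `metadata_mismatches` are hypothetical names. The 100 versus 80 length mismatch mirrors the first_name example in the text.

```python
import sqlite3

# Hypothetical data model specification: column name -> declared type.
expected_spec = {"cust_id": "NUMBER", "first_name": "VARCHAR(100)"}

conn = sqlite3.connect(":memory:")
# The table was created with a shorter first_name column than the spec requires.
conn.execute("CREATE TABLE customer_dim (cust_id NUMBER, first_name VARCHAR(80))")


def metadata_mismatches(conn, table, spec):
    """Compare declared column types in the database against the specification."""
    # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
    actual = {row[1]: row[2].upper() for row in conn.execute(f"PRAGMA table_info({table})")}
    return {
        col: (expected, actual.get(col))
        for col, expected in spec.items()
        if actual.get(col) != expected
    }


issues = metadata_mismatches(conn, "customer_dim", expected_spec)
print(issues)
```

Running the same comparison against each environment, or against a saved earlier snapshot, gives a simple way to track metadata changes over time.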

Now if they want to check the history of a customer and find out which products he or she bought in response to different marketing campaigns, it would be very tedious. Data migration testing is conducted to identify inconsistencies in data migration at an early stage. Example: Compare record counts per country between source and target. Source query: `SELECT country, count(*) FROM customer GROUP BY country`. Target query: `SELECT country_cd, count(*) FROM customer_dim GROUP BY country_cd`. Design, create and execute test plans, test cases, and test harnesses. ETL testing is different from application testing because it requires a data-centric testing approach. ETL testing is the process of verifying the accuracy of data that has been loaded from source to destination after business transformation. Data transformation is tested separately because in many cases it cannot be verified by writing a single source query. Count the records with null foreign key values in the child table. Data is extracted from an OLTP database, transformed to match the data warehouse schema and loaded into the data warehouse database. Ensure that all expected data is loaded into the target table. While most of the data completeness and data transformation tests are relevant for incremental ETL testing, there are a few additional tests that apply.
Data quality testing is done to avoid errors caused by dates or order numbers during business processes. The objective of ETL testing is to assure that the data that has been loaded from a source to a destination after business transformation is accurate. Data quality checks cover invalid characters, patterns, precisions, nulls and numbers from the source, and the invalid data is reported. Testing is important before and after a system upgrade to confirm that the system is compatible and works properly with the new upgrades. Set up test data for various scenarios of daily account balance in the source system. Data profiling is used to identify data quality issues, and the ETL is designed to fix or handle these issues. Revisit ETL task dependencies and reorder the ETL tasks so that they run in parallel as much as possible. ETL testing also involves the verification of data at the various intermediate stages used between source and destination. These differences can then be compared with the source data changes for validation. The ETL testing process resembles any customary testing process. For example: Customer ID. An ETL process is generally designed to run in either full mode or incremental mode.
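Two of the completeness checks mentioned above, per-country record counts between source and target and the count of null foreign keys in a child table, can be sketched as follows. The setup is assumed: SQLite in memory, invented rows, and a hypothetical child table `order_fact` that is not named in the article.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (cust_id INTEGER, country TEXT);
CREATE TABLE customer_dim (cust_id INTEGER, country_cd TEXT);
CREATE TABLE order_fact (order_id INTEGER, cust_id INTEGER);  -- hypothetical child table
INSERT INTO customer VALUES (1, 'IE'), (2, 'IE'), (3, 'US');
-- Customer 2 was not loaded into the dimension (a deliberate defect).
INSERT INTO customer_dim VALUES (1, 'IE'), (3, 'US');
INSERT INTO order_fact VALUES (10, 1), (11, NULL);
""")

# Each query yields (country, count) pairs, which dict() turns into a lookup.
source_counts = dict(conn.execute("SELECT country, count(*) FROM customer GROUP BY country"))
target_counts = dict(conn.execute("SELECT country_cd, count(*) FROM customer_dim GROUP BY country_cd"))

# Report every country whose count differs, treating a missing country as zero.
count_diffs = {
    country: (source_counts.get(country, 0), target_counts.get(country, 0))
    for country in sorted(set(source_counts) | set(target_counts))
    if source_counts.get(country, 0) != target_counts.get(country, 0)
}

# Child rows whose foreign key to the customer dimension is NULL.
null_fk_count = conn.execute(
    "SELECT count(*) FROM order_fact WHERE cust_id IS NULL"
).fetchone()[0]

print(count_diffs, null_fk_count)
```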

To support your business decisions, the data in your production systems has to be correct. The raw data consists of the records of an organization's daily transactions, such as interactions with customers, administration of finance, and management of employees. Validate the source and target table structure against the mapping document. Duplicate checks matter because duplicate data might lead to incorrect analytical reports. The general methodology of ETL testing is to use SQL scripting or to eyeball the data.
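Since duplicate data can lead to incorrect reports, a duplicate check on the business key is a common SQL-scripted test. The sketch below assumes SQLite in memory, made-up rows, and that `cust_id` is the key that should be unique in the target.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer_dim (cust_id INTEGER, full_name TEXT);
-- Customer 2 was loaded twice (a deliberate defect).
INSERT INTO customer_dim VALUES (1, 'Ada,Lovelace'), (2, 'Alan,Turing'), (2, 'Alan,Turing');
""")

# Any key that appears more than once in the target is reported with its count.
duplicates = conn.execute("""
    SELECT cust_id, count(*)
    FROM customer_dim
    GROUP BY cust_id
    HAVING count(*) > 1
""").fetchall()
print(duplicates)
```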
